Protocol Buffers

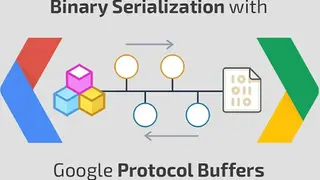

Protocol Buffers (protobuf) is Google's language-neutral, platform-neutral, extensible mechanism for serializing structured data. You describe your data once in a schema, and generated code reads and writes it in a compact binary encoding that is typically smaller and faster to parse than text formats such as XML or JSON. That makes it a common choice for performance-critical systems and for exchanging data between services written in different languages. The binary encoding is not meant to be read or edited by hand, so it is less suited to files that people maintain directly.

Protocol Buffers can be used both as a wire format for data sent over a network and as a storage format for data kept on disk; in either case the message definition (the schema) is the same. When you’re programming an application where there’s a lot of data or many different types of data, it’s really easy to run into “version hell” as soon as the data layout has to change. Protocol Buffers helps here: fields are identified by number, so new fields can be added while programs built against an older definition keep working and skip the fields they do not recognize.
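To make the versioning point concrete, here is a minimal sketch of a tagged binary encoding in the style of the Protocol Buffers wire format. It is a hand-written illustration, not the protobuf library: the helper names (`encode_field`, `decode_known`) and the sample fields are invented for this example, and only two wire types (varint and length-delimited) are handled.

```python
def encode_varint(n: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte)."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def decode_varint(buf: bytes, pos: int):
    """Return (value, next_position) for the varint starting at pos."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


VARINT, LEN = 0, 2  # the two wire types this sketch supports


def encode_field(number: int, value) -> bytes:
    """Encode one field as tag + payload (int -> varint, str -> length-delimited)."""
    if isinstance(value, int):
        return encode_varint((number << 3) | VARINT) + encode_varint(value)
    data = value.encode("utf-8")
    return encode_varint((number << 3) | LEN) + encode_varint(len(data)) + data


def decode_known(buf: bytes, known: dict) -> dict:
    """Decode the fields listed in `known`; silently skip any other field number."""
    pos, out = 0, {}
    while pos < len(buf):
        tag, pos = decode_varint(buf, pos)
        number, wire_type = tag >> 3, tag & 0x07
        if wire_type == VARINT:
            value, pos = decode_varint(buf, pos)
        elif wire_type == LEN:
            length, pos = decode_varint(buf, pos)
            value = buf[pos:pos + length].decode("utf-8")
            pos += length
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        if number in known:
            out[known[number]] = value
    return out


# A newer writer adds field 3; an older reader only knows fields 1 and 2.
message = (encode_field(1, "Ada") + encode_field(2, 36)
           + encode_field(3, "ada@example.com"))
old_reader = decode_known(message, {1: "name", 2: "age"})
new_reader = decode_known(message, {1: "name", 2: "age", 3: "email"})
print(old_reader)  # {'name': 'Ada', 'age': 36}
print(new_reader)
```

The old reader still decodes the message correctly because every field carries its own number and enough information to be skipped. In real use you would write a `.proto` file and let the protobuf compiler generate this code for you.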

Protocol Buffers Alternatives

#1 Apache Thrift

Apache Thrift is an open-source software framework for scalable cross-language services development. Its goal is to make it easy to build high-performance, scalable services in the programming language of your choice. You describe data types and service interfaces in a Thrift definition file, and the Thrift compiler (itself written in C++) generates client and server code from it. It has bindings for many languages, including C++, Java, PHP, Ruby, Objective-C, and Python.

Because the generated code targets each language's own runtime, Thrift is not tied to a particular operating system or virtual machine. You write your service once in a simple definition file and compile it into clients and servers for each supported language, which keeps services written in different languages in agreement about types and interfaces. Thrift covers more than serialization: it also provides pluggable protocols (binary, compact, and JSON among them), transports, and server implementations, so it is a full RPC framework rather than only a data format.

#2 TOML

TOML (Tom's Obvious, Minimal Language) is a configuration file format. It is designed to be easy to read thanks to a minimal syntax of key/value pairs grouped into tables, which resembles INI files more than YAML's indentation-based layout. Its value types (strings, integers, floats, booleans, dates and times, arrays, and tables) will be familiar to anyone who has used a common programming language such as C++, Java, or Python. Parsers are available for many languages, including Go, Elixir, Rust, Swift, and Ruby.

TOML is intended to map unambiguously to a hash table, so it should be easy to parse into data structures in a wide variety of languages. It is friendly to machine readers such as command-line tools while remaining convenient for humans to read and write. It is used for project configuration in the Rust, Julia, and Python ecosystems (for example Cargo.toml and pyproject.toml) and by a growing number of other projects. As a configuration format, it is an alternative to Protocol Buffers mainly where human-editable settings matter more than a compact encoding.

#3 YAML

YAML is a human-readable data serialization language. Since version 1.2 it is a superset of JSON, and it adds features such as comments, anchors and aliases for reusing nodes, and tags for associating types with values. It is often used to serialize data structures for configuration files. Its indentation-based, line-oriented syntax is easy to read, even without knowing the language. YAML was first proposed in 2001, with version 1.0 of the specification following in 2004, as a less verbose alternative to XML.

A YAML file (a "stream") contains one or more documents. Each document is built from three kinds of nodes: scalars (strings, numbers, booleans), sequences (lists), and mappings (key-value pairs written in the form key: value). Collections can also be written in a JSON-like flow style, with square brackets for sequences and curly brackets for mappings. Libraries for reading and writing YAML exist for most popular languages. Like JSON, it is a text format, so it trades compactness and parsing speed for readability.

#4 MessagePack

MessagePack is an efficient binary serialization format. It lets you exchange data among multiple languages like JSON but is faster and smaller. Like JSON, it is schemaless: you can serialize an object to a file and read back exactly the same data later without defining a message type first, although the bytes still have to be decoded. This is its main difference from schema-based binary formats like Protocol Buffers and Thrift. Implementations are available for many programming languages.

Its type system covers nil, boolean, integer (stored in 8, 16, 32, or 64 bits, signed or unsigned, depending on the value), float (32-bit and 64-bit), string, binary, array, map, and application-defined extension types. Values nest as a tree, as in JSON; the format has no built-in references between nodes and no schema or type checker, so validation is left to the application. It is fast and light on memory, and small values are especially compact: small integers take one byte, and short strings take one byte plus their contents.
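To illustrate where the size savings over JSON come from, here is a hand-written encoder for a small subset of the MessagePack format. It is a sketch for illustration only, not the official library; the function name `pack` is chosen for this example, and it deliberately rejects anything outside the subset (negative integers, long strings, large collections).

```python
import json
import struct


def pack(obj) -> bytes:
    """Encode a small subset of Python values as MessagePack bytes."""
    if obj is None:
        return b"\xc0"                                # nil
    if obj is True:
        return b"\xc3"                                # true
    if obj is False:
        return b"\xc2"                                # false
    if isinstance(obj, int):
        if 0 <= obj <= 0x7F:
            return bytes([obj])                       # positive fixint: one byte
        if 0 <= obj <= 0xFF:
            return b"\xcc" + struct.pack(">B", obj)   # uint 8
        if 0 <= obj <= 0xFFFF:
            return b"\xcd" + struct.pack(">H", obj)   # uint 16
        if 0 <= obj <= 0xFFFFFFFF:
            return b"\xce" + struct.pack(">I", obj)   # uint 32
        raise ValueError("integer outside the range this sketch supports")
    if isinstance(obj, float):
        return b"\xcb" + struct.pack(">d", obj)       # float 64, big-endian
    if isinstance(obj, str):
        data = obj.encode("utf-8")
        if len(data) > 31:
            raise ValueError("this sketch only supports fixstr (up to 31 bytes)")
        return bytes([0xA0 | len(data)]) + data       # fixstr: length in the tag byte
    if isinstance(obj, list):
        if len(obj) > 15:
            raise ValueError("this sketch only supports fixarray (up to 15 items)")
        return bytes([0x90 | len(obj)]) + b"".join(pack(x) for x in obj)
    if isinstance(obj, dict):
        if len(obj) > 15:
            raise ValueError("this sketch only supports fixmap (up to 15 pairs)")
        body = b"".join(pack(k) + pack(v) for k, v in obj.items())
        return bytes([0x80 | len(obj)]) + body
    raise TypeError(f"unsupported type: {type(obj).__name__}")


value = {"compact": True, "schema": 0}
packed = pack(value)
as_json = json.dumps(value, separators=(",", ":")).encode("utf-8")
print(len(packed), len(as_json))  # 18 27
print(packed.hex())
```

The savings come from folding type and length into a single tag byte and from dropping quotes, colons, commas, and braces.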

#5 Avro

Apache Avro is an open-source data serialization system that enables efficient data transmission, storage, and processing. It provides rich data structures and a compact binary format. Schemas are written in JSON, and data is always read with the schema that was used to write it, so the encoded data carries no per-field tags. It is widely used in the Hadoop ecosystem, both for data files and for messages sent between services.

Avro's container file format stores the schema alongside the data and supports block compression; encryption is not part of the format. Implementations exist for several languages. Code generation is optional: statically typed languages can generate classes from a schema, while dynamic languages can read and write data with just the schema at hand. When the reader's schema differs from the writer's, Avro resolves the differences using rules defined in its specification, which is how it supports schema evolution.
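To give a feel for why Avro's binary encoding is compact, the sketch below hand-encodes one record following the rules of the Avro specification: longs are zig-zag encoded and written as variable-length integers, strings are a length followed by UTF-8 bytes, and record fields are written back to back in schema order with no field names or tags. The `User` schema and the helper names are invented for this example; a real application would use an Avro library instead.

```python
import json

# Avro schemas are written in JSON. This record schema is made up for the example.
SCHEMA = json.loads("""
{
  "type": "record",
  "name": "User",
  "fields": [
    {"name": "name", "type": "string"},
    {"name": "age",  "type": "long"}
  ]
}
""")


def encode_long(n: int) -> bytes:
    """Zig-zag encode a signed 64-bit integer, then write it as a varint."""
    z = (n << 1) ^ (n >> 63)  # small magnitudes, positive or negative, become small numbers
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)  # high bit set: more bytes follow
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_string(s: str) -> bytes:
    """A string is its byte length (as a long) followed by the UTF-8 bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


# Only the two primitive types used by the example schema are handled.
ENCODERS = {"long": encode_long, "string": encode_string}


def encode_record(schema: dict, record: dict) -> bytes:
    """Concatenate the encoded fields in the order the schema declares them."""
    return b"".join(
        ENCODERS[field["type"]](record[field["name"]])
        for field in schema["fields"]
    )


payload = encode_record(SCHEMA, {"name": "Ada", "age": 36})
print(payload.hex())  # 0641646148
```

The record takes five bytes because the field names live only in the schema. The trade-off is that a reader cannot interpret those bytes without the writer's schema.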

#6 Eno

Eno is a plaintext data format designed for file-based content editing. It fills a similar role to JSON, YAML, and XML, but aims for a simpler notation that people who are not programmers can edit comfortably. It can store configuration and content in a clean, simple, and language-agnostic way, and it is used for general data as well as configuration.

Because it’s plaintext, an Eno file works anywhere: it can be kept in version control, edited in any text editor, and read by applications through a parser library for their language. There is no compilation step and no binary encoding, so it does not compete with Protocol Buffers on size or speed; its strength is content that people write and maintain by hand.

#7 Apache Ambari

Apache Ambari is a software project that lets administrators provision, manage, and monitor Hadoop clusters and integrate Hadoop with existing enterprise infrastructure. It simplifies Hadoop management through an easy-to-use web UI backed by RESTful APIs.

Application developers and system integrators can use those APIs to build Hadoop provisioning, management, and monitoring capabilities into their own tools, while a dashboard tracks the health and status of the Hadoop clusters. Other features include a step-by-step installation wizard, configuration handling, system alerting, metrics collection, and support for multiple operating systems.

#8 Apache HBase

Apache HBase is an open-source, non-relational, distributed database written in Java and modeled after Google's Bigtable. It provides random, real-time read/write access to big data. It is built to host very large tables, with billions of rows and millions of columns, and it runs on top of Hadoop and HDFS. It also offers a Thrift gateway and a RESTful web service that support XML, Protobuf, and binary data encoding options.

HBase can export metrics through the Hadoop metrics subsystem to files or Ganglia, or through JMX. Other features include linear and modular scalability, strictly consistent reads and writes, automatic failover support, a block cache and Bloom filters for real-time queries, convenient base classes for backing Hadoop MapReduce jobs with HBase tables, and automatic, configurable sharding of tables.

#9 Apache Pig

Apache Pig is a platform for creating high-level programs that run on Apache Hadoop. It is used to analyze large data sets and consists of a high-level language for expressing data analysis programs, coupled with infrastructure for evaluating those programs. The key property of Pig programs is that their structure is amenable to substantial parallelization, which is what lets them handle very large data sets.

Pig's infrastructure layer consists of a compiler that produces sequences of MapReduce programs, for which large-scale parallel implementations already exist. Its language, Pig Latin, offers ease of programming, opportunities for the system to optimize how tasks are executed, and extensibility, since users can write their own functions for special-purpose processing.

#10 Apache Mahout

Apache Mahout is a distributed linear algebra framework developed under the Apache Software Foundation and freely available. It provides a Scala DSL designed to let mathematicians, statisticians, and data scientists implement their own algorithms. Mahout can be extended to various distributed backends and provides modular native solvers for CPU, GPU, and CUDA acceleration.

Mahout comes with Java and Scala libraries for common math operations and primitive Java collections. Mahout-Samsara is its DSL, which lets users express algorithms concisely and clearly in an R-like syntax. Development is most active on the Apache Spark engine, but you are free to implement support for any engine you need. Mahout is applied in areas such as web technologies, data stores, search, and machine learning.

#11 Apache Oozie

Apache Oozie is a server-based workflow scheduling system for managing Hadoop jobs. An Oozie workflow is a collection of control flow nodes and action nodes arranged in a directed acyclic graph (DAG). Its primary function is to run different types of jobs in the right order, with the dependencies between jobs specified in the workflow.
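The idea of a workflow as a directed acyclic graph can be sketched in a few lines of Python. This is not Oozie's API (Oozie workflows are defined in XML); it only shows how declaring dependencies between jobs is enough to derive a valid execution order. The job names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library since Python 3.9

# Hypothetical workflow: each key is a job, each value is the set of jobs
# that must finish before it can start.
workflow = {
    "ingest": set(),
    "clean": {"ingest"},
    "aggregate": {"clean"},
    "train-model": {"clean"},
    "report": {"aggregate", "train-model"},
}

# static_order() yields every job after all of its dependencies.
# It raises graphlib.CycleError if the graph contains a cycle.
order = list(TopologicalSorter(workflow).static_order())
print(order)
```

Jobs with no dependency between them, such as `aggregate` and `train-model` here, may appear in either order, and a scheduler is free to run them in parallel.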

Oozie supports several types of Hadoop jobs out of the box, thanks to its integration with the rest of the Hadoop stack. It is an extensible and scalable system that triggers workflow jobs based on time and data availability. Workflow jobs can be started, stopped, and re-run, and failed workflows can be re-run as well.

#12 Apache Hadoop

Apache Hadoop is an open-source software framework for the collection, storage, and analysis of very large data sets. It is a reliable and highly scalable technology that processes large data sets in a distributed manner across clusters of computers, scaling from single servers to thousands of machines.

Its core components include a distributed file system (HDFS) and a programming model and processing component known as MapReduce. The distributed file system splits data files into large blocks and distributes those blocks across the nodes in the cluster.
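The split-then-process idea can be sketched in plain Python with the classic word-count example. Everything here runs in a single process and the function names are chosen for this illustration; Hadoop itself distributes the blocks and the map and reduce tasks across machines.

```python
from collections import defaultdict
from itertools import chain


def split_into_blocks(lines, block_size):
    """Divide the input into fixed-size blocks, as a distributed file system would."""
    return [lines[i:i + block_size] for i in range(0, len(lines), block_size)]


def map_phase(block):
    """Map: emit a (word, 1) pair for every word in the block."""
    return [(word.lower(), 1) for line in block for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine the values for each key into one result."""
    return {key: sum(values) for key, values in groups.items()}


lines = ["the quick brown fox", "the lazy dog", "the fox"]
blocks = split_into_blocks(lines, block_size=2)

# Each block could be mapped on a different machine; here we just loop.
mapped = chain.from_iterable(map_phase(block) for block in blocks)
counts = reduce_phase(shuffle(mapped))
print(counts["the"], counts["fox"])  # 3 2
```

Because each block is mapped independently, adding machines lets more blocks be processed at the same time, which is the source of Hadoop's scalability.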

Hadoop is a big data technology: it offers an ecosystem and framework built to process very large amounts of data. Its core features include distributed processing of large data sets, high availability delivered in software rather than through reliance on hardware, and a reliable distributed file system.